My Annual Re-appraisal of "AI"

Searching for the nuance

Here’s my initial reaction to this phenomenon, from 2022, when I was fairly alarmed.

Let’s see how nuanced I can be now.

1. I’m talking about LLM transformer models (one of my friends who knows how badly I just butchered that description can correct me) that have been given the marketing label “AI”.

2. I don’t know how to ethically process the fundamentally gross feeling I have about the non-consensual use of all the data the world has produced so far. This reminds me of the old chestnut about when grave robbing becomes archaeology. The answer is that there is no bright line; it’s continually negotiated and contextual. As a society, most people seem to be ok with the current formula, under which intellectual property protections run out at some point and the work enters ‘the public domain’. What the current crop of “AI” companies are saying with their actions is that, in their context, ALL IP is public domain. For them. Right now. But not their code, obviously.

I’m trying to imagine a decade or two down the road, when we’ve got much more solid (maybe more stable) models that have been trained on the world’s data and are providing some unalloyed good for humanity. I don’t know what that good is yet, but let’s pretend we’re there. Are there going to be ethical holdouts who refuse to engage with or benefit from that good because of the actions these companies took in the early 2020s?

Like… we live on land stolen from people our ancestors committed genocide against. This is the case for almost all humans. Those of you reading this are using devices made possible and affordable by virtual, if not literal, slavery and the immiseration of a huge chunk of the world’s population. Somehow, most of us find ways to compromise with the context in which we find ourselves. Compared to these two examples, IP theft seems insignificant. This is not an argument for an Ought. It’s my prediction for what will be an Is.

One thing that makes the ethical dilemma so intense is that a lot of people currently NEED their IP to eat, pay rent and have medical care. I’m sure the intensity would change if this were not the case.

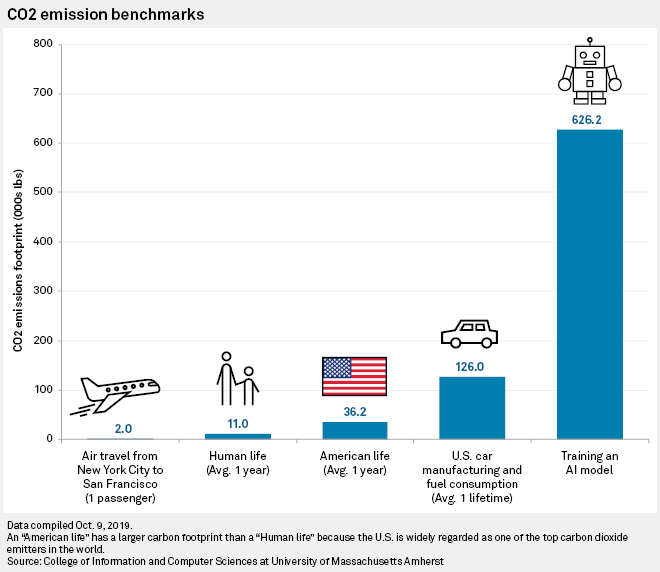

3. The environmental impact is currently immense. Most projections I’ve seen suggest that this impact will eventually diminish. It’s also important to factor in what OTHER environmental damage is being offset by using “AI” right now. For example, if running an “AI” does the work of 100 people, all the energy required to power those 100 people’s work can be subtracted from the overall impact calculus. (Those 100 people will, presumably, still be alive, so their impact doesn’t disappear from the world, just from the specific application the “AI” is doing.)

This is obviously the dream scenario that all those billions are being bet on. But what we are currently seeing is... not that. And that’s ok. I’m not judging what can be based on what currently is. But I’m also aware that heavily financially incentivized exaggeration is a thing.

4. I don’t like the “AI” bubble that is threatening to blow up the world economy. But I don’t think that is relevant or intrinsic to the technology itself. We can blow up the economy with real estate and tulips, so I’m not pinning the danger on the tech. That’s a government and business ethics situation.

5. LLMs are intrinsically deceptive to a lot of people. For those of us who care enough to try to understand them, this isn’t that big a deal. But it’s becoming apparent that many people fundamentally misunderstand the nature of these tools and interpret them as real minds that have thoughts and access to Truth. They are forming very deep ‘relationships’ with them. They are treating the fawning feedback on their ideas as authoritative validation. They are implementing them in business, law and government as though these systems do anything other than provide responses that sound like answers.

Besides the obvious risk, injustice, and psychological derangement we’re starting to see, the fact that these misperceptions are vital fuel in the fire of the “AI” bubble makes the whole situation incredibly disturbing to me. And I haven’t even mentioned the political ramifications of a world where no image, audio, video or text can be taken as evidence of reality!

6. For years I’ve been asking anyone I know whether they can tell me how the LLM hallucination problem can be solved, and I haven’t found any of the explanations compelling. Obviously, as a non-expert, I give my own intuition about this very little weight on the pseudo-Bayesian scale I try to enforce on myself. But I also discount the explanations, because hype is a real thing that does real things to real brains.

If, as I suspect, the hallucination problem cannot be fixed, this drastically limits the potential application of LLMs.

Here is a recent third-party summary, then the company’s own summary, then the actual paper regarding the hallucination problem.

This is incredibly limiting unless there is a cultural shift towards an uber-rationalist approach to risk and blame. The classic self-driving car apologia goes: “Yes, some % of people will die because of bugs or ethical rule sets programmed into the vehicles, but the total number of deaths will be much lower than it currently is.” But for whatever reason, humans have a much stronger emotional reaction to one of these kinds of death than to the other. If that could be changed, then the potential applications of LLMs, including their expected hallucinations, could be broadened. In this imagined world, we accept 10-20% disastrous outcomes because overall efficiency has increased so much that we find the trade-off worth it. But listen. We can’t even get most people to understand that lab-cultured meat is infinitely LESS disgusting than factory-farmed meat. So I don’t have high hopes here.

7. LLMs give people the ability to do a lot more things at a mediocre level. Great, now all of us can illustrate birthday cards, give dating advice, and program an app. As long as the quality of that output isn’t particularly important, we can all be mediocre Leonardo da Vincis. This is another element that could change radically if the hallucination problem is solved.

The really interesting part of this for me is how indie artists and other creatives can now release a ‘whole package’. Most bands can’t afford to hire a professional artist to make cover art for them. Most authors are in the same position. Most YouTubers can’t afford to hire a musician to write a theme song for them or a team of videographers to shoot a bunch of specific b-roll. You get the picture.

Now that these jobs can be done virtually ‘for free’, the line between indie and professional creatives is becoming harder to see (for most people, anyway; a lot of artists can still spot gen AI cover art from a mile away). Before, most indie authors had less-than-professional-looking covers, and that signaled to the market that there was a high chance the book would also be poorly edited and suffer from a lack of other quality control. This, of course, is unfair to some indie authors. The inverse mechanism also meant that genuinely low-talent authors who had secured publishing deals could mislead you about the quality of their book because it had a $10,000 cover. My point is that for individual artists/creatives, the power to create the perception that you are rungs above your actual status is cool. But it’s also fleeting. As more and more of them learn this trick, it will stop working, because other signals or curation mechanisms will probably evolve.

From an ethical perspective, one response to the indie artist/creative goes like this: “If you can’t afford to pay a cover artist or musician, you should simply make do with the low quality you CAN afford. Pay your dues, build your brand the old-fashioned way, and eventually you’ll be successful enough to pay for the art you want to grace the cover of your book or album.”

This is related to the argument about living wages for small businesses: “If you can’t afford to pay your employees living wages, your business plan is not valid, because it depends on the externalities of stress and suffering for your employees.”

For the sake of making the most good-faith argument I can muster, I’m going to set aside prompts that invoke a specific living artist’s style. (Like, “Give me a cover for my book in the style of Studio Ghibli”, or “Make me an album cover in the style of Dan Seagrave.”) The only possible way to ethically justify this is in a context where people don’t need income to survive and thrive. And even then it’s a loooong stretch.

So I do think there’s a difference in kind between prompts that summon the stolen techniques of a living artist and prompts like “Make a book cover of an autumnal forest with a moss-covered ruined castle in a pointillist style.” While both kinds rely on non-consensual data extraction, the second is far more likely to be finely mixing its ingredients with things most people would consider firmly in the public domain. Is this a kind of art-theft-washing? Of course. But its impact on artists’ ability to protect what makes them special and to earn an income is far smaller.

The other side of this coin is that there’s a whole ecosystem of beginner/learning and lower-talent artists who can’t hope to compete in a market flooded with “virtually free” mediocre art. This is a pattern we’re seeing in many sectors of the economy. The first things LLMs can replace are the rote, repetitive drudgery of any given profession. This effectively means there’s no reason for companies to hire juniors, even though doing the boring repetitive stuff is typically where juniors learn the ropes. At some point companies are going to figure out that they can’t exclusively hire from an increasingly aging pool of very experienced people, so they will have to bite the bullet and accept that training juniors simply needs to be part of their budget. But this is not true in the art world. Gen AI is simply obliterating the opportunities for mediocre/beginning artists the world over. Removing those opportunities for this pool of people (which I am a part of in many respects) is, I believe, very damaging to the world for many reasons I won’t go into here.

Given all of these dynamics, it’s hard for me to be a hard-liner towards indie artists/creatives concerning their use of gen AI to create the aspects of their output that they lack skill or interest in and can’t afford to commission. I don’t LIKE it. I don’t encourage it. But it’s way more understandable than someone like Taylor Swift using it.

All of this reminds me of an idea I had a long time ago that MUST be a common thought and therefore must exist somewhere: a marketplace for creatives to trade work-for-work. “I’ll make an album cover for you if you write a comic for me.” That sort of thing. This would be tricky, since it’s probably seldom the case that one creative needs exactly the same amount of work from another who also needs the exact kind/style of work in return… there would have to be some kind of credit ecosystem…

8. I’ve heard from some people I trust that using “AI” has been a significant efficiency boost for them. I remain skeptical, because whenever I ask for a detailed description, it seems like they are not accounting for the time they spend panning for that gold. I’m guessing the nuggets give them a satisfying emotional kick, and the drudgery of creating and scrapping the five previous attempts fades into a distant memory. This is likely something people get better at with time, so I’m not assuming that this is the permanent state of the technology or workflow.

9. There are most certainly tasks and domains where LLMs provide opportunities that would be impractical or impossible to achieve otherwise. Specifically, I think the ability to search and synthesize vast amounts of data will unlock some cool things we haven’t considered yet. I’m especially intrigued by the idea of having an LLM train on my own work to help me find inconsistencies or other unintended issues. One of the innovations I’m attempting in the artform of transmedia worldbuilding is completely internally consistent lore. The books won’t contradict the movies or games or comics, or whatever.

This is the kind of thing that presumably I could hire someone to do for me, which leads back to my examination in point 7. However, I’m skeptical that this is even possible, given that there are no examples I’m aware of where a commercially successful IP has remained internally consistent. I’ve always assumed that I would need some kind of fancy application to track this kind of thing, especially as more artists and writers contribute to the IP. Maybe no company has done this because they don’t think the benefits of a completely consistent world would outweigh the costs. Either way, it is one of a couple of innovations I hope I’m bringing to the artform, and there are no systems I know of that do this, and no economically viable way for me to learn to do it, unless there’s an LLM solution.

Which puts me squarely in the position of those indie artists/creatives that I judge for using genAI to facilitate their overall brand. Well… kinda. Unlike the examples I gave of the author or musician who wants cool cover art that could be made by a near-infinite pool of currently existing talent, what I am attempting is something I’ve never heard of or seen done. Maybe this is one of the applications of LLMs that the evangelists mean when they say new kinds of creativity will be possible. Either way, whether I’m engaging in post-hoc rationalization or really onto something, the ethical concerns I have around LLMs are greatly attenuated by the details of this potential application.

Enough to make me 100% comfortable? Absolutely not. But I’m also operating in capitalism, I’m using hardware that requires slaves, I’m using software that supports billionaires who are actively undermining democracy. There are very few things in my life that are not ethically compromised. This could look like a whataboutism-structured argument, but that’s not what I’m trying to argue. I’m not saying that because I have little choice in these other ethically compromised positions, one more compromise won’t make a difference. It’s more that we all have a threshold for the compromises we can live with. (As an example of a compromise I can’t live with: I have not used genAI to make any art, writing or music, though these are all things that would greatly benefit my goals.) But what I’m imagining in my head (this local integrity-checker app) does not cross the threshold into compromises I can’t live with. Could such an application BE what is in my head? I don’t know. It would require exploration to discover that.
